
Create Advanced AI Agents with LangChain and RAG Techniques

Artificial intelligence is transforming many industries by automating tasks, analyzing vast amounts of data, and surfacing insights at a scale that was previously out of reach. Two tools have become especially popular for building advanced AI agents that can perform complex tasks: LangChain, a framework for building applications on top of large language models, and RAG (Retrieval-Augmented Generation), a technique for grounding a model’s answers in external data. This blog post introduces LangChain and RAG, explores their applications, and walks you through creating advanced AI agents that combine them.

1. What is LangChain?

LangChain is an open-source framework for building applications and AI agents powered by large language models (LLMs). It makes it easy to connect LLMs to external data sources, APIs, and other tools so they can perform sophisticated tasks.

LangChain simplifies the process of building applications that require language models to interact with external knowledge, like databases, documents, or APIs. By providing a high-level API, LangChain facilitates the development of chatbots, personal assistants, content generation tools, and more.

Key features of LangChain:

- Memory management: LangChain allows AI agents to remember past interactions and leverage context for more natural conversations.

- Chains: Chains refer to sequences of tasks that LangChain can execute, including calling APIs, querying databases, and processing language model outputs.

- Agents: An agent uses a language model to decide which action to take next, such as which tool to call and with what input, then observes the result and repeats until the task is done. This lets LangChain agents handle complex multi-step workflows whose steps are not fixed in advance.

LangChain provides a modular framework for building scalable AI systems and integrates with many popular LLM providers, including OpenAI’s GPT models, Anthropic’s Claude, Google’s Gemini, and open-source models from Hugging Face.
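To make the agent idea above concrete, here is a minimal plain-Python sketch of the loop an agent runs: the model chooses an action, the chosen tool runs, its result is fed back, and the loop ends when the model gives a final answer. This is an illustration, not LangChain’s implementation: scripted_llm is a hypothetical stand-in for a real model call, and both tools are toy examples.

```python
from typing import Callable

# Toy tools (hypothetical): each takes a string argument and returns a string result.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(n) for n in expr.split("+"))),
    "lookup": lambda term: {"langchain": "a framework for building LLM applications"}.get(
        term.strip().lower(), "unknown"
    ),
}


def scripted_llm(question: str, observations: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for an LLM that returns (action, argument).

    A real agent would prompt the model with the question, the tool
    descriptions, and the observations so far, then parse its reply.
    """
    if observations:
        return "final_answer", f"Answer: {observations[-1]}"
    if "+" in question:
        return "calculator", question.replace(" ", "")
    return "lookup", question


def run_agent(question: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, argument = scripted_llm(question, observations)
        if action == "final_answer":
            return argument
        # Run the chosen tool and feed its output back on the next iteration.
        observations.append(TOOLS[action](argument))
    return "Stopped: step limit reached"


print(run_agent("2 + 3"))  # prints "Answer: 5"
print(run_agent("LangChain"))  # prints "Answer: a framework for building LLM applications"
```

The max_steps cap matters in practice: without it, a model that never produces a final answer would keep the loop running forever.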

2. What is Retrieval-Augmented Generation (RAG)?

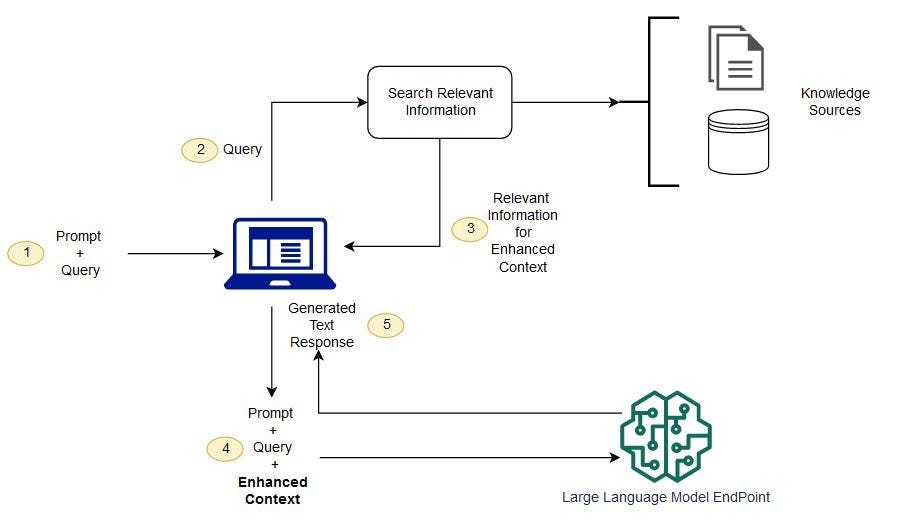

RAG is a technique that enhances language generation by incorporating information from external sources at query time. A retrieval component first fetches relevant documents from a database or knowledge base, and a generative model (such as a GPT model) then writes a human-like response that draws on the retrieved text.

The RAG technique addresses a key limitation of standard language models: they can only draw on what they learned during training, so on their own they cannot consult private documents or information newer than their training data. By retrieving relevant material and adding it to the prompt, RAG helps models give more accurate, relevant, and contextually aware responses.

How RAG works:

- Retrieval: A retriever searches an external source (such as a vector database, file system, or web search) for documents relevant to the user’s query.

- Generation: The language model receives the retrieved documents as context in its prompt and generates a response informed by that knowledge.

This combination of retrieval and generation produces answers that are relevant to the user’s query and grounded in the retrieved sources, which can be kept as current as the underlying knowledge base.
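The retrieve-then-generate flow can be sketched in plain Python. This toy version ranks documents by how many words they share with the query (production systems usually compare embedding vectors instead) and builds the augmented prompt that would be sent to the generative model. The retrieve and build_prompt functions are hypothetical, and no model is actually called.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step (toy): return the k documents sharing the most words with the query."""
    query_terms = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_terms & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context_docs: list[str]) -> str:
    """Generation input: place the retrieved text in the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


docs = [
    "LangChain simplifies AI workflows",
    "RAG enhances language models with retrieved documents",
    "FAISS performs fast similarity search",
]
question = "How does RAG enhance language models?"
top_docs = retrieve(question, docs)
print(build_prompt(question, top_docs))
```

The string returned by build_prompt is what a real system would pass to the language model in the generation step.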

3. Building Advanced AI Agents Using LangChain and RAG

Now that we have an understanding of LangChain and RAG, let’s dive into how to combine them to build advanced AI agents that can handle complex tasks like information extraction, conversational AI, and multi-step workflows.

Step 1: Set Up LangChain

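To get started, install LangChain. It is available for Python and for JavaScript/TypeScript (as LangChain.js); the examples in this post use Python. The OpenAI integration and the FAISS vector store wrapper live in separate packages, and FAISS itself needs the faiss-cpu library, so install them alongside the core package:

pip install langchain langchain-openai langchain-community faiss-cpu

Once installed, you can start using LangChain to create your AI agents by defining chains of tasks and integrating external APIs or databases. The OpenAI examples below also expect your API key in the OPENAI_API_KEY environment variable.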

Step 2: Integrating Retrieval-Augmented Generation (RAG)

Incorporating RAG into LangChain involves integrating a retrieval system, which fetches documents or data, with a generative model. You can use a search engine, database, or a document retrieval system as the retrieval source. The key is to make sure your agent can efficiently query these sources and use the results for better context when generating responses.
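One way to keep the retrieval source swappable is to have the agent depend on a small interface rather than on a specific backend. The sketch below uses typing.Protocol; Retriever, KeywordRetriever, TableRetriever, and gather_context are hypothetical names for illustration (LangChain has its own retriever abstraction, which this does not reproduce).

```python
from typing import Protocol


class Retriever(Protocol):
    """Hypothetical interface: anything with a search(query, k) method can serve as a source."""

    def search(self, query: str, k: int) -> list[str]: ...


class KeywordRetriever:
    """Document-store stand-in: returns texts that share at least one word with the query."""

    def __init__(self, texts: list[str]) -> None:
        self.texts = texts

    def search(self, query: str, k: int) -> list[str]:
        words = set(query.lower().split())
        hits = [t for t in self.texts if words & set(t.lower().split())]
        return hits[:k]


class TableRetriever:
    """Database stand-in: exact lookup of a single record by key."""

    def __init__(self, rows: dict[str, str]) -> None:
        self.rows = rows

    def search(self, query: str, k: int) -> list[str]:
        row = self.rows.get(query.strip().lower())
        return [row] if row is not None else []


def gather_context(query: str, sources: list[Retriever], k: int = 3) -> list[str]:
    # Query every source, merge the results in order, and drop duplicates.
    merged: dict[str, None] = {}
    for source in sources:
        for text in source.search(query, k):
            merged.setdefault(text, None)
    return list(merged)[:k]


documents = KeywordRetriever(["RAG grounds answers in documents", "Agents call tools"])
glossary = TableRetriever({"rag": "RAG = Retrieval-Augmented Generation"})
print(gather_context("rag", [documents, glossary]))
# prints ['RAG grounds answers in documents', 'RAG = Retrieval-Augmented Generation']
```

Because gather_context relies only on the search method, adding a web-search or vector-store source means writing one more class with that method; the agent code does not change.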

Here’s a simplified example of using RAG with LangChain:

from langchain_community.vectorstores import FAISS
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Step 1: Create a vector store for document retrieval
documents = ["Document 1: AI is the future", "Document 2: LangChain simplifies AI workflows", "Document 3: RAG enhances language models"]
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_texts(documents, embeddings)
retriever = vectorstore.as_retriever(search_kwargs={"k": 2})  # return the 2 closest documents

# Step 2: Define a prompt that injects the retrieved context
prompt = ChatPromptTemplate.from_template(
    "Answer the question using only this context:\n{context}\n\nQuestion: {question}"
)

# Step 3: Choose a generative model (text-davinci-003 has been retired; any current chat model works)
llm = ChatOpenAI(model="gpt-4o-mini")

def format_docs(docs):
    # Join the retrieved Document objects into a single context string
    return "\n".join(doc.page_content for doc in docs)

# Step 4: Chain retrieval and generation: fetch context, fill the prompt, call the model, return text
rag_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

# Query the chain with a question
query = "What is the role of LangChain in AI?"
response = rag_chain.invoke(query)
print(response)

In this example, LangChain retrieves documents related to the query using the FAISS vector store and then feeds that context into the OpenAI model for generation. The result is a response that is informed by both the query and the external documents retrieved.
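Under the hood, the similarity search amounts to finding the stored vectors closest to the query vector. The sketch below does this by brute force with Euclidean distance, which is what an exact (“flat”) index computes. The three-dimensional vectors are made-up stand-ins for real embeddings, which typically have hundreds or thousands of dimensions, and nearest is a hypothetical helper, not a FAISS function.

```python
import math

# Made-up 3-D "embeddings" for illustration only; real ones come from an embedding model.
index = {
    "Document 1: AI is the future": [0.9, 0.1, 0.0],
    "Document 2: LangChain simplifies AI workflows": [0.2, 0.9, 0.1],
    "Document 3: RAG enhances language models": [0.1, 0.3, 0.9],
}


def nearest(query_vector: list[float], k: int = 2) -> list[str]:
    """Return the k stored texts whose vectors have the smallest Euclidean distance to the query."""
    scored = sorted(index.items(), key=lambda item: math.dist(query_vector, item[1]))
    return [text for text, _ in scored[:k]]


# A toy query vector that lies closest to Document 2, then Document 3.
print(nearest([0.25, 0.8, 0.2]))
# prints ['Document 2: LangChain simplifies AI workflows', 'Document 3: RAG enhances language models']
```

Comparing against every stored vector is exact but grows linearly with the collection size, which is why libraries like FAISS also offer approximate indexes for large corpora.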

Step 3: Handling Complex Tasks with LangChain Agents

LangChain allows you to create agents that can execute more advanced workflows. For example, your AI agent might need to:

- Retrieve external knowledge.

- Analyze the knowledge to extract key information.

- Perform an action based on the knowledge.

With LangChain, you can define custom workflows for such agents using “chains” of tasks. Each chain can represent a step in the workflow, and you can easily orchestrate how agents fetch, process, and generate information.
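The idea of a chain can be sketched without any framework: each step is a function whose output feeds the next. The three steps below (retrieve, extract, act) mirror the workflow listed above; the corpus, the step functions, and make_chain are hypothetical placeholders for a retriever, an LLM analysis call, and a real action.

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]

# Hypothetical in-memory corpus standing in for a real knowledge source.
CORPUS = {
    "rag": [
        "RAG retrieves documents before generating.",
        "RAG adds retrieved text to the prompt.",
    ],
}


def make_chain(*steps: Step) -> Step:
    # Compose steps left to right: each step's output becomes the next step's input.
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def retrieve(topic: str) -> list[str]:
    # Placeholder for a retriever, database query, or API call.
    return CORPUS.get(topic, [])


def extract(docs: list[str]) -> dict[str, Any]:
    # Placeholder for an LLM analysis step that pulls out key facts.
    return {"count": len(docs), "key_point": docs[0] if docs else "nothing found"}


def act(facts: dict[str, Any]) -> str:
    # Placeholder for an action such as sending a report or calling an external API.
    return f"Report: {facts['count']} source(s); key point: {facts['key_point']}"


workflow = make_chain(retrieve, extract, act)
print(workflow("rag"))  # prints "Report: 2 source(s); key point: RAG retrieves documents before generating."
```

LangChain’s expression language composes its own components in a similar left-to-right way using the | operator.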

Here’s an example of the final step in such a workflow, where a chain takes an article’s text and generates a summary:

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

# Create a template for generating summaries
prompt = PromptTemplate(input_variables=["article"], template="Summarize the article: {article}")

# Pipe the prompt into a chat model and parse the reply into a plain string
chain = prompt | ChatOpenAI(model="gpt-4o-mini") | StrOutputParser()

# Example article text
article_text = "AI is transforming industries by automating tasks, analyzing data, and driving innovation in various sectors."

# Generate a summary
summary = chain.invoke({"article": article_text})
print(summary)

This chain covers the processing and generation steps. In a complete agent, the article text would come from a retrieval step like the one in Step 2 rather than being hard-coded, and the same pattern adapts to other types of content by changing the prompt template.

4. Real-World Applications of LangChain and RAG Techniques

With LangChain and RAG, you can build powerful AI agents for various applications:

- Intelligent Chatbots and Virtual Assistants: These agents can fetch relevant data from external sources (like documents or APIs) and generate human-like responses based on that data. They can assist users with queries, provide recommendations, and perform tasks like booking appointments or answering customer service inquiries.

- Content Generation: Use LangChain to create agents that generate creative content such as blog posts, articles, or marketing materials. By integrating RAG, these agents can keep the content contextually relevant and grounded in current source material.

- Data Analysis and Extraction: Advanced AI agents can analyze large datasets, extract valuable insights, and present them in a human-readable format. For instance, RAG can be used to pull data from multiple sources, and LangChain can orchestrate the analysis and reporting process.

- Personalized Recommendations: Build agents that recommend content, products, or services based on external data sources and user preferences, ensuring the recommendations are grounded in the latest knowledge and trends.

5. Conclusion

LangChain and RAG techniques offer powerful tools for building advanced AI agents that can retrieve knowledge, generate responses, and perform complex workflows. By integrating large language models with external data sources, these agents are capable of producing more informed, accurate, and contextually aware outputs. Whether you’re building chatbots, content generators, or intelligent assistants, LangChain and RAG open up new possibilities for AI development.

As AI continues to evolve, the combination of retrieval and generation will become an increasingly important part of creating smarter, more dynamic AI agents. With LangChain, you have the flexibility to build and deploy these agents with ease, bringing the next generation of AI-powered applications to life.